First-time poster here. Thank you for this forum of interesting ideas. I’ve long been fascinated by the history and future of personal computers and end-user programming, and I’m enjoying reading the posts, exchanges, and explorations.

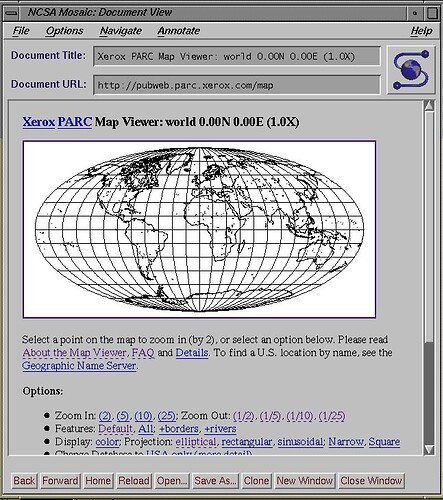

Without having any particular point to make... sometimes I think about the birth of the World Wide Web: its vision of empowering people and augmenting the human intellect, how it has turned out in reality, and the creative, liberating potential of the medium.

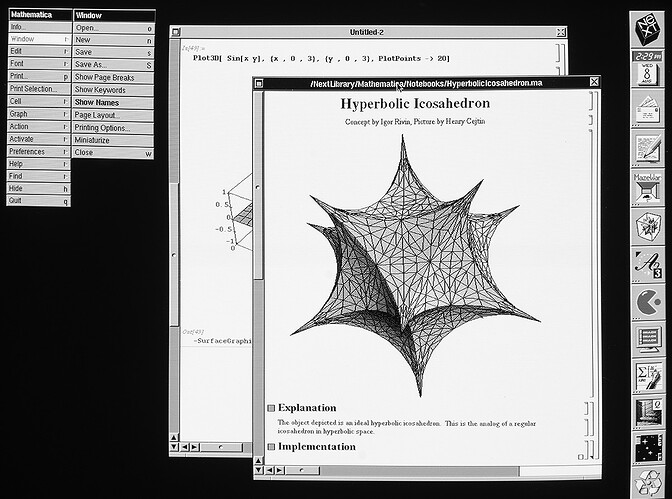

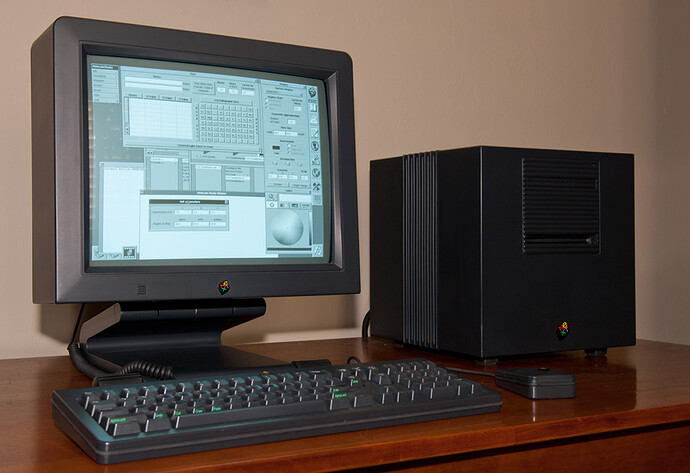

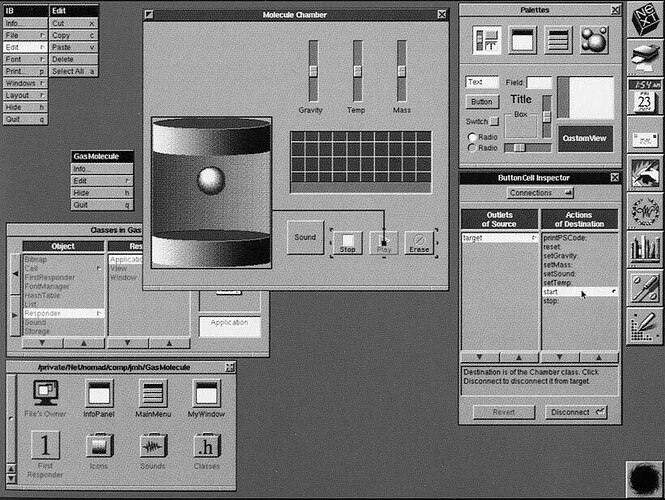

I feel like there’s a reason the web was invented on the NeXT computer. I don’t mean to praise this particular model or brand, but to acknowledge that the conceptual design of this machine, its architecture and user interface (inherited and evolved from the explorations at the Xerox PARC research lab), must have played a role in the creative thinking that led to the innovation.

NeXTSTEP had been such a productive development environment that in 1990, about two years after the NeXT Computer was revealed, Sir Tim Berners-Lee at CERN used it to create WorldWideWeb.

During the 1997 Macworld demo, Jobs revealed that on his 1979 visit he had actually missed two other PARC technologies that were critical to the future.

One was pervasive networking between personal computers: Xerox had invented Ethernet and built it into every one of its Alto workstations.

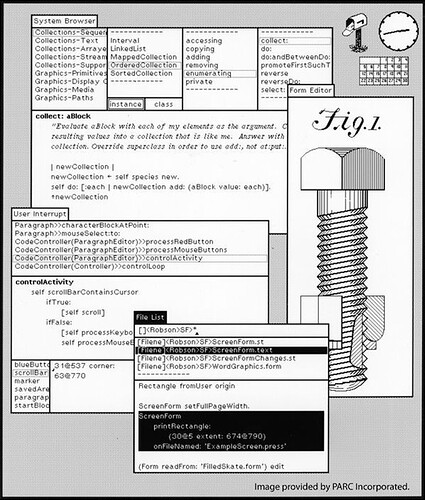

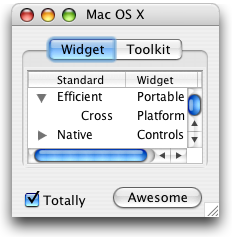

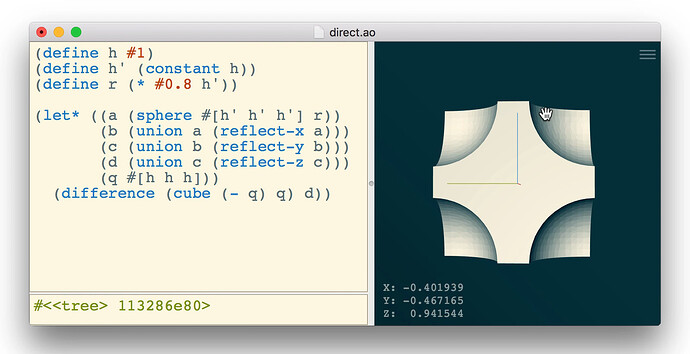

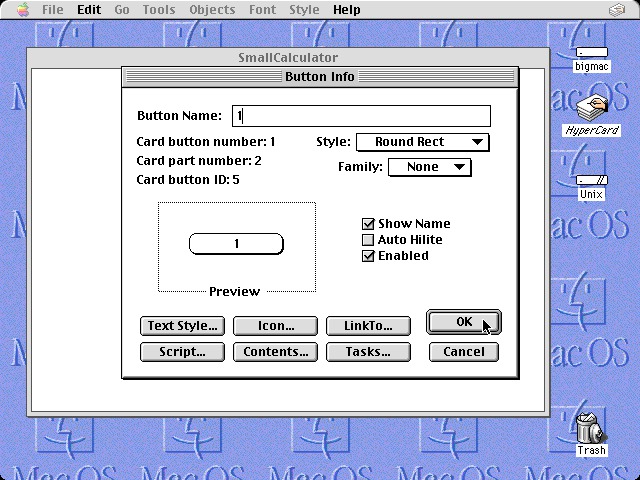

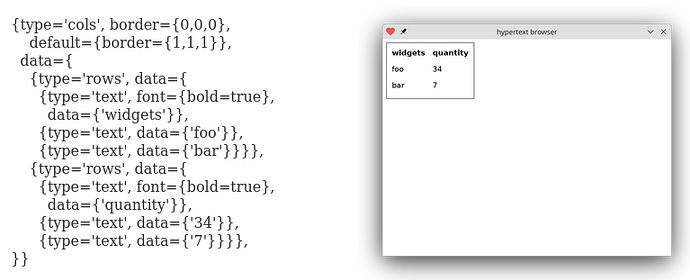

The other was a new paradigm for programming, dubbed “object-oriented programming” by Alan Kay. Kay, working with Dan Ingalls and Adele Goldberg, designed a new programming language and development environment that embodied this paradigm, running on an Alto. Kay called the system “Smalltalk”..

Smalltalk’s development environment was graphical, with windows and menus. In fact, Smalltalk was the exact GUI that Steve Jobs saw in 1979.. During Jobs’ visit to PARC, he had been so enthralled by the surface details of the GUI that he completely missed the radical way it had been created with objects. The result was that programming graphical applications on the Macintosh would become much more difficult than doing so with Smalltalk.

With the NeXT computer, Jobs planned to fix this exact shortcoming of the Macintosh. The PARC technologies missing from the Mac would become central features on the NeXT.

NeXT computers, like other workstations, were designed to live in a permanently networked environment. Jobs called this “inter-personal computing,” though it was simply a renaming of what Xerox’s Thacker and Lampson called “personal distributed computing.” Likewise, dynamic object-oriented programming on the Smalltalk model provided the basis for all software development on NeXTSTEP.

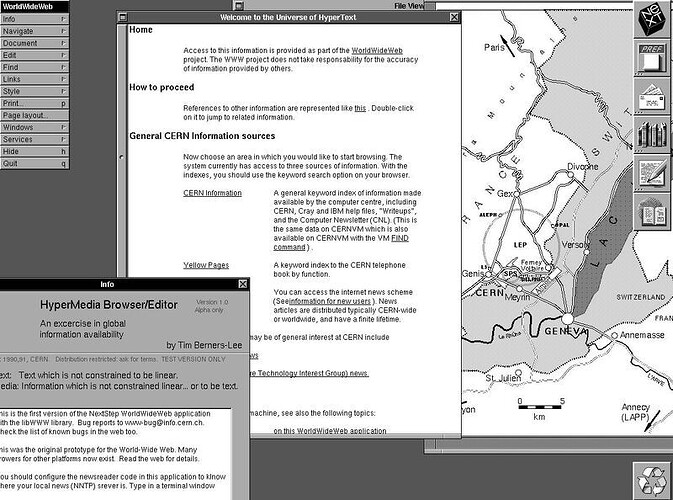

In March 1989, Tim laid out his vision for what would become the web in a document called “Information Management: A Proposal”. Believe it or not, Tim’s initial proposal was not immediately accepted. In fact, his boss at the time, Mike Sendall, wrote the words “Vague but exciting” on the cover.

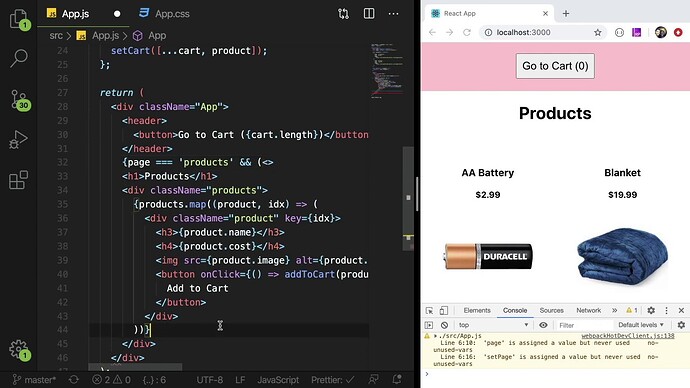

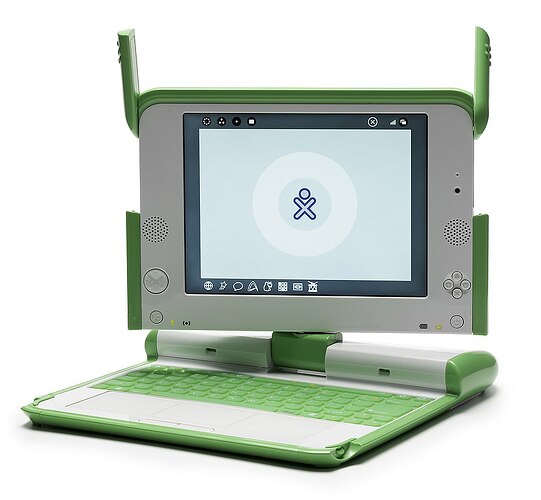

The web was never an official CERN project, but Mike managed to give Tim time to work on it in September 1990. He began work using a NeXT computer, one of Steve Jobs’ early products.

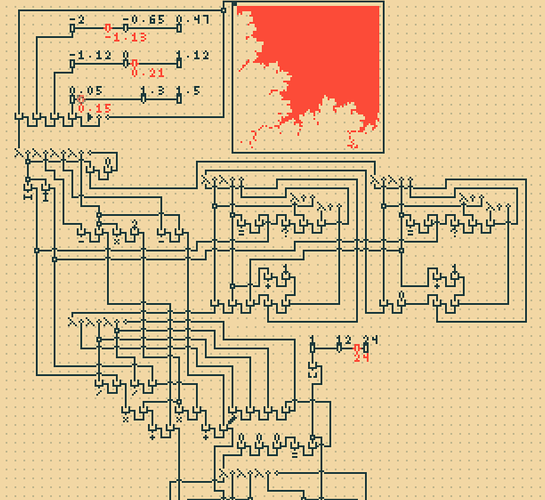

By October of 1990, Tim had written the three fundamental technologies that remain the foundation of today’s web..

- HTML: HyperText Markup Language. The markup (formatting) language for the web.

- URI: Uniform Resource Identifier. A kind of “address” that is unique and used to identify each resource on the web. It is also commonly called a URL.

- HTTP: Hypertext Transfer Protocol. Allows for the retrieval of linked resources from across the web.

Tim also wrote the first web page editor/browser (“WorldWideWeb.app”) and the first web server (“httpd”).

It’s significant that the first web browser was also a web authoring tool.

Tim Berners-Lee wrote what would become known as WorldWideWeb on a NeXT Computer during the second half of 1990, while working for CERN. WorldWideWeb is the first web browser and also the first WYSIWYG HTML editor.

The browser was announced on the newsgroups and became available to the general public in August 1991. By this time, several others..were involved in the project.

..The team created so-called “passive browsers”, which could not edit pages, because it was hard to port this feature from the NeXT system to other operating systems.

Passive browsers... It’s a small step from there to the “passive web”: a kind of “faster horse” version of television.