Hi all,

It’s nice seeing a Uxn thread on here.

I like what Uxn is doing, and I like its minimalist, simple feel, but sadly, because of these limitations, I don’t think it’s a candidate for a “100 year computer” for preserving personal data and software. It might still be worth examining for ideas.

I’d like to share Kragen’s critique of Chifir, which informed the design of Uxn:

Bootstrapping instruction set

Chifir is inspirational. It’s the first archival virtual machine good enough to criticize. You can implement the CPU in a page of C (my version is 71 lines and took me 32 minutes to write), and then all you need is screen emulation, which took me another 40 lines and 28 minutes.†

Archival virtual machines have applications beyond archival. Independent implementations of the virtual machine defeat Karger–Thompson attacks at the virtual machine level and potentially the hardware level as well. And a stable deterministic virtual machine enables the publication of reference implementations of other virtual machines and deterministic rebuilds of derived files, such as compiler output, database indices, or raw pixels output from an image codec.

Alas, Chifir is not good enough to actually use as a general-purpose archival virtual machine, which of course is not its intended purpose. Any of the following problems would by itself be a fatal flaw (for a general-purpose archival virtual machine):

- The specification does not include test cases, so there is no way to debug a candidate implementation, and in particular to easily disambiguate the natural-language specification of the instruction set. In fact, as far as I can tell, not even the Smalltalk-72 image described in the paper has been released. This is important because when I added screen output to my implementation of Chifir, my first test program was a single-instruction infinite loop, which had a bug and also found a bug in my implementation of Chifir.

- Also, the instruction set contains unspecified behavior, including access to out-of-bounds memory addresses, execution of any of the 4294967280 undefined opcodes, division by zero, the appearance of pixels that aren’t all-zeroes or all-ones, and arguably the rounding direction of division. Unspecified behavior is a much worse problem for archival virtual machines than for the systems we’re more familiar with, because presumably the archaeologist implementing the virtual machine specification has no working implementation to compare to.

- The straightforward implementation of the instruction set using `for (;;) { ... switch(load(pc)) {...} }` is unavoidably going to be pretty slow, because the instructions only manipulate 32-bit quantities. Getting reasonable performance would require a JIT-compiler implementation, which is complicated by the need to invalidate and recompile self-modifying code.

- Input is blocking; it is impossible to read keyboard input, which is the only kind of input, without blocking. That means that Chifir will, at any given time, be either unresponsive to the user or unable to execute any code until the user types a key.

- The instruction set has absurdly low code density, requiring 128 bits per instruction. This is a big problem for archival virtual machines because archival media are many orders of magnitude lower density than media customarily used for software interchange.

- Although the specification specifies the bit order of the image when stored as individual bits (as in their proposed physical medium), it does not specify an image file format as a series of bytes. In my implementation, I chose to represent the image in binary big-endian format, with the most significant byte first, but a different implementor might make a different choice, such as representing the image in a sequence of the ASCII digits “0” and “1”. Such differences would produce unnecessary incompatibility at the file level between implementations, if not at the micro-engraved disc level.

- Chifir has no provision for access to storage media; the only I/O is the screen and keyboard.

- Because Chifir has no clock, no timer interrupts, and no keyboard interrupts, there is no way within the Chifir system to regain control from an accidentally-executed infinite loop.
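
To make several of the points above concrete, here is a minimal C sketch of the `for (;;) { switch ... }` style of interpreter. It is not Chifir: the opcode numbers, the 4096-word memory size, the names `vm_load_image` and `vm_run`, and the step budget are all assumptions made up for illustration. What it tries to show is a byte-level image format pinned to big-endian, four 32-bit words per instruction, and every failure case (bad address, undefined opcode, division by zero) given a named result instead of being left unspecified.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical opcode numbers for illustration; NOT Chifir's real table. */
enum { OP_HALT = 0, OP_ADD = 1, OP_DIV = 2, OP_JUMP = 3 };

/* Every way a run can end has a name, so nothing is left unspecified. */
typedef enum {
    VM_HALTED, VM_BAD_OPCODE, VM_BAD_ADDRESS, VM_DIV_BY_ZERO, VM_OUT_OF_STEPS
} vm_status;

#define MEM_WORDS 4096u /* assumed fixed limit, identical in every implementation */

typedef struct {
    uint32_t mem[MEM_WORDS];
    uint32_t pc;
} vm;

/* Load a byte image as big-endian 32-bit words (most significant byte first).
   Returns 0 on success, -1 if the length is not a multiple of 4 or too big. */
int vm_load_image(vm *m, const uint8_t *bytes, size_t len)
{
    assert(m != NULL && (bytes != NULL || len == 0));
    if (len % 4 != 0 || len / 4 > MEM_WORDS) return -1;
    memset(m, 0, sizeof *m);
    for (size_t i = 0; i < len / 4; i++) {
        const uint8_t *p = bytes + 4 * i;
        m->mem[i] = (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16
                  | (uint32_t)p[2] << 8  | (uint32_t)p[3];
    }
    return 0;
}

static int in_bounds(uint32_t a, uint32_t b, uint32_t c)
{
    return a < MEM_WORDS && b < MEM_WORDS && c < MEM_WORDS;
}

/* Run until halt, a trap, or the host-side step budget is used up. */
vm_status vm_run(vm *m, uint64_t max_steps)
{
    assert(m != NULL);
    for (uint64_t step = 0; step < max_steps; step++) {
        if (m->pc > MEM_WORDS - 4) return VM_BAD_ADDRESS;
        /* One instruction is four 32-bit words: opcode, a, b, c (128 bits). */
        const uint32_t *ins = &m->mem[m->pc];
        uint32_t a = ins[1], b = ins[2], c = ins[3];
        switch (ins[0]) {
        case OP_HALT:
            return VM_HALTED;
        case OP_ADD: /* mem[a] = mem[b] + mem[c], wrapping modulo 2^32 */
            if (!in_bounds(a, b, c)) return VM_BAD_ADDRESS;
            m->mem[a] = m->mem[b] + m->mem[c];
            break;
        case OP_DIV: /* unsigned division, rounding toward zero */
            if (!in_bounds(a, b, c)) return VM_BAD_ADDRESS;
            if (m->mem[c] == 0) return VM_DIV_BY_ZERO;
            m->mem[a] = m->mem[b] / m->mem[c];
            break;
        case OP_JUMP: /* pc = a; the target is checked at the top of the loop */
            m->pc = a;
            continue;
        default:
            return VM_BAD_OPCODE;
        }
        m->pc += 4;
    }
    return VM_OUT_OF_STEPS;
}
```

A single-instruction infinite loop (a `JUMP` to address 0) comes back as `VM_OUT_OF_STEPS` in this sketch. Note that the step budget lives in the host, so it does not give code running inside the machine any way to regain control by itself.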

However, it’s worth pointing out some things Chifir got right:

- Unlike Lorie’s UVC, the Chifir virtual machine has well-defined arbitrary implementation limits and overflow behavior, so it should be straightforward for all implementations to have the same arbitrary limits. This will avoid incompatibilities where a virtual machine image works on one implementation of the virtual machine (perhaps the one used by an archivist) but fails for obscure reasons on another (perhaps the one written by an archaeologist.)

- Like BF, and unlike most virtual machines, Chifir does succeed in being implementable in an afternoon, being a “fun afternoon’s hack”. In fact, it vastly overshoots this goal: an afternoon is three to eight hours, depending on your definition, and it took me 32 minutes to implement Chifir without a display and an hour to implement Chifir with a display. (The COCOMO model used by David A. Wheeler’s ‘SLOCCount’ instead estimates it would take a week, but it’s usually wrong in such a way for such small programs.) Chifir could be about three times more complicated without overshooting the one-afternoon budget, especially if the implementor has known-good test programs to work with instead of having to concurrently write and debug their own. Maybe a complexity budget of 512 lines of C, a bit under eight pages, would be a reasonable absolute maximum.

- Chifir’s CPU lacks many edge-case-rich features present in other CPUs, which are common compatibility stumbling blocks. For example, it doesn’t have floating point, flag bits, signed multiplication and division, different memory access modes, different instruction formats, different addressing modes, for that matter any general-purpose registers, memory protection, virtual memory, interrupts, different memory spaces (as in modified Harvard architectures like the Hack CPU). Similarly, its I/O specifications are ruthlessly simple, leaving little room for compatibility problems. It’s very likely that a straightforward implementation of the specification will be, if not absolutely right, at least almost right.

- In part because Chifir lacks such corner cases, nothing stops you from writing a multithreaded program or JIT compiler in Chifir code.
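
The points about well-defined overflow and known-good test programs suggest shipping test vectors alongside a specification. The following C sketch shows what a tiny table of such vectors could look like. The four operations, the names `alu_reference` and `count_failures`, and the assumption that arithmetic is unsigned and wraps modulo 2^32 are all mine for illustration; they are not taken from the Chifir specification.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical operations with assumed semantics: unsigned, modulo 2^32. */
typedef enum { ALU_ADD, ALU_SUB, ALU_MUL, ALU_LESS } alu_op;

typedef uint32_t (*alu_fn)(alu_op op, uint32_t x, uint32_t y);

/* Reference behavior the vectors below were written against. */
uint32_t alu_reference(alu_op op, uint32_t x, uint32_t y)
{
    switch (op) {
    case ALU_ADD:  return x + y;
    case ALU_SUB:  return x - y;
    case ALU_MUL:  return (uint32_t)((uint64_t)x * y); /* widen, then truncate */
    case ALU_LESS: return x < y ? 1u : 0u;             /* unsigned compare */
    }
    return 0;
}

/* A plausible mistake: reading "less than" as a signed comparison. */
uint32_t alu_signed_less(alu_op op, uint32_t x, uint32_t y)
{
    if (op == ALU_LESS) return (int32_t)x < (int32_t)y ? 1u : 0u;
    return alu_reference(op, x, y);
}

typedef struct { alu_op op; uint32_t x, y, expected; } alu_vector;

/* Edge cases chosen to expose overflow and signedness mistakes. */
static const alu_vector VECTORS[] = {
    { ALU_ADD,  0xFFFFFFFFu, 1u,          0u          },
    { ALU_SUB,  0u,          1u,          0xFFFFFFFFu },
    { ALU_MUL,  0x10000u,    0x10000u,    0u          },
    { ALU_MUL,  0xFFFFFFFFu, 0xFFFFFFFFu, 1u          },
    { ALU_LESS, 0xFFFFFFFFu, 0u,          0u          },
    { ALU_LESS, 0u,          0xFFFFFFFFu, 1u          },
};

/* Run a candidate implementation against every vector; return the miss count. */
size_t count_failures(alu_fn impl)
{
    assert(impl != NULL);
    size_t failures = 0;
    for (size_t i = 0; i < sizeof VECTORS / sizeof VECTORS[0]; i++) {
        const alu_vector *v = &VECTORS[i];
        if (impl(v->op, v->x, v->y) != v->expected) failures++;
    }
    return failures;
}
```

Calling `count_failures(alu_reference)` reports no misses, while the deliberately wrong `alu_signed_less` misses both comparison vectors. That is the kind of disagreement an implementor cannot discover from a natural-language description alone.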

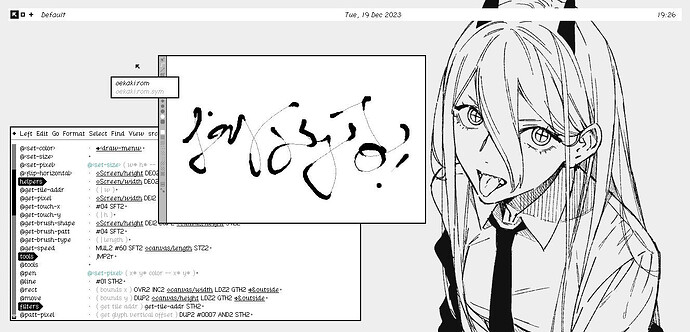

Uxn Design

I’ll put some notes down for anyone reading and interested in why the design looks the way it does.

First, Uxn doesn’t have a limit of 64kb (for example, Oquonie is 500kb). It does have a bound of 64kb of addressable working memory, but that’s only the length of fast memory: it can read and write massive files (my wiki, for example, is hundreds of pages to parse in Uxn), and most implementations have at least 16 pages of RAM, even on small devices.

Uxn has no undefined behavior, and it has a massive test suite and a self-hosted assembler. The entire spec fits on a single piece of paper (double-sided, perhaps), and the cell length is specified and kept small so we can target a variety of consoles.

I wrote a devlog that goes into detail about type checking, devices, and other things, for anyone interested in learning more.