One aspect that comes up a lot, at least in the circles I lurk, is reversibility. Being able to undo computation. Some computation models are better than others at achieving it, I think a malleable system would benefit from some form of it.

If we believe that a future computer is one that more closely follows the laws of the natural world in which nothing is created and nothing is lost. This future computer might have this particularity.

Reversible computing is a model of computation in which time is reversible.

As far as anyone knows, the laws of physics are reversible: that is, you can run them in reverse and recover any earlier state of the universe. This means that from a physical perspective, information can never be destroyed, only shuffled around. A process is said to be physically reversible if it results in no increase in physical entropy; it is isentropic.

Reversible Logic

The first condition for any deterministic device to be reversible is that its input and output be uniquely retrievable from each other. This is called logical reversibility. If, in addition to being logically reversible, a device can actually run backwards then it is called physically reversible and the second law of thermodynamics guarantees that it dissipates no heat.

Energy Consumption of Computation

Landauer’s principle holds that with any logically irreversible manipulation of information, such as the erasure of a bit or the merging of two computation paths, must be accompanied by a corresponding entropy increase in non-information-bearing degrees of freedom of the observation apparatus.

Microprocessors which are reversible at the level of their fundamental logic gates can potentially emit radically less heat than irreversible processors, and someday that may make them more economical than irreversible processors. The Pendulum microprocessor is a logically reversible computer architecture that resembles a mix of PDP-8 and RISC.

The promise of reversible computing is that the amount of heat loss for reversible architectures would be minimal for significantly large numbers of transistors. Rather than creating entropy (and thus heat) through destructive operations, a reversible architecture conserves the energy by performing other operations that preserve the system state.

An erasure of information in a closed system is always accompanied by an increase in energy consumption.

Linear Logic

A linear system excludes combinators that have a duplicative effect, as well as those that a destructive effect. A linear logic computer language avoids the need for garbage collection by explicit construction and destruction of objects. In a reversible machine, garbage collection for recycling storage can always be performed by a reversed sub-computation. The “dangling reference problem” cannot happen in a linear language because the only name occurrence for an object is used to invoke its destructor, and the destructor doesn’t return the object.

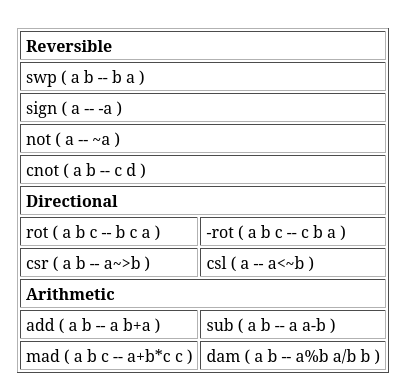

Most Forth operators take their operands from the top of the stack and return their values to the top of the stack. The language’s absence of variable names is characteristic of [combinators](file:///home/neauoire/Git/oscean/site/logic.html), the programming of Forth operators can therefore be seen as the construction of larger combinators from smaller ones. A Forth which incorporates only stack permutation operations like swap, rotate, and roll must be linear, because it has no copying or killing operators.

- NREVERSAL of Fortune, Baker on the thermodynamics and meaning of garbage collection on Lisp systems.